In my last post, I went over some of the basics of the Web Audio API and showed you how to generate sine waves of various frequencies and amplitudes. We were introduced to some key Web Audio classes, such as `AudioContext`, `AudioNode`, and `JavaScriptAudioNode`.

This time, I'm going to take things a little further and build a real-time spectrum analyzer with Web Audio and HTML5 Canvas. The final product plays a remote music file and displays the frequency spectrum overlaid with a time-domain graph.

The demo is here: JavaScript Spectrum Analyzer. The code for the demo is in my GitHub repository.

The New Classes

In this post we introduce three new Web Audio classes: `AudioBuffer`, `AudioBufferSourceNode`, and `RealtimeAnalyserNode`.

An `AudioBuffer` represents an in-memory audio asset. It is usually used to store short audio clips and can contain multiple channels.

An `AudioBufferSourceNode` is a specialization of `AudioNode` that plays audio from an `AudioBuffer`.

A `RealtimeAnalyserNode` is an `AudioNode` that provides time- and frequency-domain analysis data in real time.

The Plumbing

To begin, we need to acquire some audio. The API supports a number of different formats, including MP3 and raw PCM-encoded audio. In our demo, we retrieve a remote audio asset (an MP3 file) using AJAX and use it to populate a new `AudioBuffer`. This is implemented in the `RemoteAudioPlayer` class (`js/remoteaudioplayer.js`) like so:

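```javascript
RemoteAudioPlayer.prototype.load = function(callback) {
  var request = new XMLHttpRequest();
  var that = this;

  request.open("GET", this.url, true);
  // Ask for raw binary data instead of text.
  request.responseType = "arraybuffer";

  request.onload = function() {
    // Decode the MP3 bytes into an AudioBuffer, mixing down to mono.
    // (This two-argument createBuffer form is the early WebKit API;
    // later versions of the spec use context.decodeAudioData instead.)
    that.buffer = that.context.createBuffer(request.response, true);
    that.reload();
    callback(request.response);
  };

  request.send();
};
```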

Notice that jQuery's AJAX calls aren't used here. This is because jQuery does not support the `arraybuffer` response type, which is required for loading binary data from the server. The `AudioBuffer` is created with the `AudioContext`'s `createBuffer` function. The second parameter, `true`, tells it to mix down all the channels to a single mono channel.
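Conceptually, mixing down to mono combines the samples of every channel into one channel. The snippet below is a minimal sketch that assumes a plain average of the channels; it is not necessarily how the browser implements the `true` flag, and `mixToMono` is a hypothetical helper. It takes one `Float32Array` per channel, like the arrays returned by `AudioBuffer.getChannelData()`.

```javascript
// Hypothetical helper: average N channels of PCM samples into a single mono
// channel. Assumes every channel has the same length, as the channels of an
// AudioBuffer do.
function mixToMono(channels) {
  if (channels.length === 0) {
    return new Float32Array(0);
  }
  const length = channels[0].length;
  const mono = new Float32Array(length);
  for (let i = 0; i < length; ++i) {
    let sum = 0;
    for (const channel of channels) {
      sum += channel[i];
    }
    // Plain average; a real mixer might weight channels differently.
    mono[i] = sum / channels.length;
  }
  return mono;
}

// Example with two short, made-up channels.
const left = new Float32Array([0.5, 1.0, -0.5]);
const right = new Float32Array([0.5, 0.0, 0.5]);
console.log(Array.from(mixToMono([left, right]))); // [ 0.5, 0.5, 0 ]
```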

The `AudioBuffer` is then provided to an `AudioBufferSourceNode`, which will be the context's audio source. This source node is then connected to a `RealtimeAnalyserNode`, which in turn is connected to the context's destination, i.e., the computer's output device.

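```javascript
// Create a source node that plays the decoded AudioBuffer.
var source_node = context.createBufferSource();
source_node.buffer = audio_buffer;

// Create an analyzer node with a 2048-point FFT.
var analyzer = context.createAnalyser();
analyzer.fftSize = 2048;

// Route: source -> analyzer -> output device.
source_node.connect(analyzer);
analyzer.connect(context.destination);
```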

To start playing the music, call the `noteOn` method of the source node. `noteOn` takes one parameter: the time at which to start playing, measured on the same clock as `context.currentTime`. If it is `0`, playback starts immediately. To start playing the music 0.5 seconds from now, use `context.currentTime` as the reference point:

```javascript
// Play music 0.5 seconds from now
source_node.noteOn(context.currentTime + 0.5);
```
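Later revisions of the Web Audio API renamed `noteOn` to `start` (and `noteOff` to `stop`). If you want the snippet above to work in both older and newer browsers, a small feature-detecting wrapper is one option. This is a sketch only; `startSource` is a hypothetical helper name, not part of the API.

```javascript
// Hypothetical helper: start an AudioBufferSourceNode `delay` seconds from now,
// preferring the standard start() method and falling back to the older noteOn().
// Returns the context time at which playback was scheduled.
function startSource(sourceNode, context, delay = 0) {
  const when = context.currentTime + delay;
  if (typeof sourceNode.start === "function") {
    sourceNode.start(when);
  } else {
    sourceNode.noteOn(when);
  }
  return when;
}

// In a browser, this would play the music 0.5 seconds from now:
// startSource(source_node, context, 0.5);
```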

It's also worth noting that we set the size of the FFT to 2048 via the `analyzer.fftSize` property. For those unfamiliar with DSP theory, a 2048-point FFT splits the spectrum, from 0 Hz up to the Nyquist frequency (half the sample rate), into 1024 frequency bins; the analyzer's `frequencyBinCount` is always half of `fftSize`. Bin n sits at n × sampleRate / 2048 Hz, so each bin is about 21.5 Hz wide at a 44.1 kHz sample rate.
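To map a bin index back to a frequency, multiply it by the bin width, sampleRate / fftSize. The sketch below assumes a 44.1 kHz sample rate for the example; in a browser you would read `context.sampleRate` instead. Both helper names are hypothetical.

```javascript
// Hypothetical helpers for interpreting analyzer output.
// An analyzer with a given fftSize reports fftSize / 2 frequency bins,
// covering 0 Hz up to the Nyquist frequency (sampleRate / 2).
function binCount(fftSize) {
  return fftSize / 2;
}

// Frequency (in Hz) at which bin `index` sits.
function binFrequency(index, sampleRate, fftSize) {
  return (index * sampleRate) / fftSize;
}

// Example, assuming a 44.1 kHz context and the 2048-point FFT used above:
const exampleRate = 44100;
console.log(binCount(2048));                        // 1024
console.log(binFrequency(1, exampleRate, 2048));    // 21.533203125
console.log(binFrequency(1023, exampleRate, 2048)); // 22028.466796875
```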

The Pretty Graphs

Okay, it's now all wired up. How do I get the pretty graphs? The general strategy is to poll the analyzer every few milliseconds (e.g., with `window.setInterval`), request the time- or frequency-domain data, and then render it onto an HTML5 Canvas element. The analyzer exports a few different methods to access the analysis data: `getFloatFrequencyData`, `getByteFrequencyData`, and `getByteTimeDomainData`. Each of these methods fills a typed array that you pass in (a `Float32Array` for the float variant, a `Uint8Array` for the byte variants) with the corresponding analysis data.
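`getFloatFrequencyData` reports magnitudes in decibels, while `getByteFrequencyData` squeezes them into the range 0 to 255 using the analyzer's `minDecibels` and `maxDecibels` properties (-100 dB and -30 dB by default). The sketch below reproduces that linear mapping as described in the current Web Audio specification; `dbToByte` is a hypothetical name, and older browser implementations may have differed in details such as rounding.

```javascript
// Hypothetical helper mirroring how a byte frequency value is derived from a
// decibel value: a linear map of [minDecibels, maxDecibels] onto [0, 255],
// floored and clamped at both ends.
function dbToByte(db, minDecibels = -100, maxDecibels = -30) {
  const scaled = Math.floor(((db - minDecibels) * 255) / (maxDecibels - minDecibels));
  return Math.min(255, Math.max(0, scaled));
}

console.log(dbToByte(-100)); // 0   (at minDecibels)
console.log(dbToByte(-65));  // 127 (halfway)
console.log(dbToByte(-30));  // 255 (at maxDecibels)
console.log(dbToByte(-10));  // 255 (clamped)
```

Silence can show up as `-Infinity` in the float data; the clamp maps it to 0.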

In the snippet below, we schedule an `update()` function to run every 50ms. It splits the frequency-domain data points into 30 bins and renders a bar representing the average magnitude of the points in each bin.

```javascript
var canvas = document.getElementById(canvas_id);
var canvas_context = canvas.getContext("2d");

function update() {
  // This graph has 30 bars.
  var num_bars = 30;

  // Get the frequency-domain data. The analyzer produces
  // frequencyBinCount (fftSize / 2) values, each in the range 0-255.
  var data = new Uint8Array(analyzer.frequencyBinCount);
  analyzer.getByteFrequencyData(data);

  // Clear the canvas
  canvas_context.clearRect(0, 0, canvas.width, canvas.height);

  // Break the samples up into bins
  var bin_size = Math.floor(data.length / num_bars);
  var bar_width = canvas.width / num_bars;
  for (var i = 0; i < num_bars; ++i) {
    var sum = 0;
    for (var j = 0; j < bin_size; ++j) {
      sum += data[(i * bin_size) + j];
    }

    // Calculate the average magnitude of the samples in the bin
    var average = sum / bin_size;

    // Draw the bar, growing upward from the bottom of the canvas
    var scaled_average = (average / 256) * canvas.height;
    canvas_context.fillRect(i * bar_width, canvas.height, bar_width - 2,
                            -scaled_average);
  }
}

// Render every 50ms
window.setInterval(update, 50);

// Start the music
source_node.noteOn(0);
```

A similar strategy can be used for time-domain data, with a few minor differences: time-domain data is usually rendered as a wave, so you might want to use many more bins and plot points or lines instead of drawing bars. The code that renders the time- and frequency-domain graphs in the demo is encapsulated in the `SpectrumBox` class in `js/spectrum.js`.
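For the time-domain graph, `getByteTimeDomainData` returns one byte per sample, with 128 representing zero amplitude. Here's a sketch of turning those bytes into canvas coordinates you could feed to `lineTo()`; `timeDomainToPoints` is a hypothetical helper and is not the `SpectrumBox` implementation from the demo.

```javascript
// Hypothetical helper: map byte time-domain samples (0..255, 128 = zero) to
// {x, y} canvas points spread across the full width of the canvas.
function timeDomainToPoints(data, width, height) {
  const points = [];
  const step = data.length > 1 ? width / (data.length - 1) : 0;
  for (let i = 0; i < data.length; ++i) {
    // Convert to the range [-1, 1); 128 maps to 0 (the vertical middle).
    const v = (data[i] - 128) / 128;
    // Canvas y grows downward, so positive samples are drawn above the midline.
    const y = height / 2 - v * (height / 2);
    points.push({ x: i * step, y: y });
  }
  return points;
}

// In a browser, you might draw the wave like this:
// var samples = new Uint8Array(analyzer.fftSize);
// analyzer.getByteTimeDomainData(samples);
// canvas_context.beginPath();
// timeDomainToPoints(samples, canvas.width, canvas.height).forEach(function (p, i) {
//   if (i === 0) canvas_context.moveTo(p.x, p.y); else canvas_context.lineTo(p.x, p.y);
// });
// canvas_context.stroke();
```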

The Minutiae

I glossed over a number of things in this post, mostly with respect to the details of the demo. You can learn it all from the source code, but here's a summary for the impatient:

The graphs are actually two HTML5 Canvas elements overlaid using CSS absolute positioning. Each element is driven by its own `SpectrumBox` instance: one displays the frequency spectrum, the other displays the time-domain wave.

The routing of the nodes is done in the `onclick` handler for the `#play` button. It takes the `AudioSourceNode` from the `RemoteAudioPlayer`, routes it to the node of the frequency analyzer, routes that to the node of the time-domain analyzer, and then finally to the destination.
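Chaining two analyzers works because an analyzer node passes its input through to its output unchanged. Since `connect()` just links one node to the next, a chain like source, frequency analyzer, time-domain analyzer, destination can be built with a small loop. This is a sketch with a hypothetical `connectChain` helper, not the demo's actual handler code.

```javascript
// Hypothetical helper: connect each node to the one after it, e.g.
// connectChain(source, freq_analyzer, time_analyzer, context.destination).
// Returns the first node so the chain's entry point is easy to keep around.
function connectChain(...nodes) {
  for (let i = 0; i < nodes.length - 1; ++i) {
    nodes[i].connect(nodes[i + 1]);
  }
  return nodes[0];
}
```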

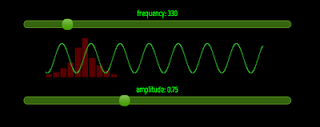

Bonus: Another Demo

That's all, folks! You now have the know-how to build yourself a fancy new graphical spectrum analyzer. If all you want to do is play with the waves and stare at the graphs, check out my other demo: the Web Audio Tone Analyzer (source). This is really just the same spectrum analyzer from the first demo, connected to the tone generator from the last post.

References

As a reminder, all the code for my posts is available at my GitHub repository: github.com/0xfe.

The audio track used in the demo is a discarded take of Who-Da-Man, which I recorded with my previous band Captain Starr many, many years ago.

Finally, don't forget to read the Web Audio API draft specification for more information.

Enjoy!
