The Web Audio API is a W3C draft standard interface for building in-browser audio applications. Although the draft is well specified, it is almost impossible to find useful documentation on building applications with it.

In my quest to understand HTML5 audio more deeply, I spent some time figuring out how the API works, and decided to write up this quick tutorial on doing useful things with it.

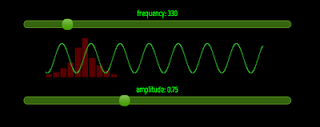

We will build a sine wave tone-generator entirely in JavaScript. The final product looks like this:

Web Audio Tone Generator.

The full code is available in my GitHub repository:

https://github.com/0xfe/experiments/tree/master/www/tone

Caveats

The Web Audio API is a draft, is likely to change, and does not work in all browsers. Right now, only the latest versions of Chrome and Safari support it.

Onwards We Go

Getting started making sounds with the Web Audio API is straightforward so long as you take the time to study the plumbing, most of which exists to allow for real-time audio processing and synthesis. The complete specification is available on the W3C Web Audio API page, and I'd strongly recommend that you read it thoroughly if you're interested in building advanced applications with the API.

To produce any form of sound, you need an AudioContext and a few AudioNodes. The AudioContext is sort of like an environment for audio processing -- it's where various attributes such as the sample rate, the clock status, and other environment-global state reside. Most applications will need no more than a single instance of AudioContext.

The AudioNode is probably the most important component in the API, and is responsible for synthesizing or processing audio. An AudioNode instance can be an input source, an output destination, or a mid-stream processor. These nodes can be linked together to form processing pipelines that render a complete audio stream.

One kind of AudioNode is the JavaScriptAudioNode, which is used to generate sounds in JavaScript. This is what we will use in this tutorial to build a tone generator.

Let us begin by instantiating an AudioContext and creating a JavaScriptAudioNode.

```javascript
var context = new webkitAudioContext();
var node = context.createJavaScriptNode(1024, 1, 1);
```

The parameters to createJavaScriptNode are the buffer size, the number of input channels, and the number of output channels. The buffer size is measured in sample frames and must be one of 256, 512, 1024, 2048, 4096, 8192, or 16384. It controls how often the node calls back asking for a buffer refill. Smaller sizes give lower latency. Larger sizes make glitches (audible dropouts) less likely, because the callback runs less often.
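To put numbers on that trade-off: the time between refill callbacks is roughly the buffer size divided by the sample rate. The helper below, `callbackIntervalMs`, is hypothetical and not part of the Web Audio API. It is a small sketch that assumes a 44100 Hz context.

```javascript
// Buffer sizes accepted by createJavaScriptNode, per the list above.
var VALID_BUFFER_SIZES = [256, 512, 1024, 2048, 4096, 8192, 16384];

// Hypothetical helper: milliseconds of audio held in one buffer, i.e., the
// approximate time between onaudioprocess callbacks for a single node.
function callbackIntervalMs(bufferSize, sampleRate) {
  if (VALID_BUFFER_SIZES.indexOf(bufferSize) === -1) {
    throw new RangeError("Unsupported buffer size: " + bufferSize);
  }
  return (bufferSize / sampleRate) * 1000;
}

// Print the interval for every valid size at 44.1 kHz.
VALID_BUFFER_SIZES.forEach(function(size) {
  console.log(size + " frames -> " +
      callbackIntervalMs(size, 44100).toFixed(1) + " ms");
});
```

At 1024 frames the handler runs roughly every 23 ms. At 16384 frames it runs only about every 370 ms, which would make slider changes feel sluggish.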

We're going to use the JavaScriptAudioNode as the source node, along with a bit of code to create sine waves. To actually hear anything, it must be connected to an output node. It turns out that context.destination gives us just that -- a node that maps to the speakers on your machine.

The SineWave Class

To start off our tone generator, we create a SineWave class, which wraps the AudioNode and the wave generation logic into one cohesive package. This class will be responsible for creating the JavaScriptAudioNode instance, generating the sine wave, and managing the connection to the destination node.

```javascript
var SineWave = function(context) {
  var that = this;
  this.x = 0; // Initial sample number
  this.context = context;
  this.node = context.createJavaScriptNode(1024, 1, 1);
  // Route every buffer-refill request to this instance's process() method.
  this.node.onaudioprocess = function(e) { that.process(e); };
};

SineWave.prototype.process = function(e) {
  // Fill the first (and only) output channel with a raw sine wave.
  var data = e.outputBuffer.getChannelData(0);
  for (var i = 0; i < data.length; ++i) {
    data[i] = Math.sin(this.x++);
  }
};

SineWave.prototype.play = function() {
  // Wire the node to the speakers so it becomes audible.
  this.node.connect(this.context.destination);
};

SineWave.prototype.pause = function() {
  // Unplug the node from the speakers.
  this.node.disconnect();
};
```

Upon instantiation, this class creates a JavaScriptAudioNode and attaches an event handler to onaudioprocess for buffer refills. The event handler requests a reference to the output buffer for the first channel, and fills it with a sine wave. Notice that the handler does not know the buffer size in advance, and gets it from data.length.

The buffer is a Float32Array, one of JavaScript's Typed Arrays. These arrays allow for high-throughput processing of raw binary data. To learn more about Typed Arrays, check out the Mozilla Developer Documentation on Typed Arrays.
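You can try the same fill loop outside the browser on a plain Float32Array, the kind of array getChannelData returns. This is only an illustration. The 1024-sample length is an assumption that stands in for whatever buffer size the node was created with.

```javascript
// Stand-in for e.outputBuffer.getChannelData(0): a 1024-sample
// Float32Array, matching the buffer size passed to createJavaScriptNode.
var data = new Float32Array(1024);
var x = 0; // running sample number, like SineWave's this.x

// Same loop as SineWave.prototype.process: the length comes from the
// array itself, not from a hard-coded constant.
for (var i = 0; i < data.length; ++i) {
  data[i] = Math.sin(x++);
}

// Elements are stored as 32-bit floats, so data[1] equals Math.sin(1)
// rounded to single precision.
console.log(data.length, data[1], data[1] === Math.fround(Math.sin(1)));
```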

To try out a quick demo of the SineWave class, add the following code to the onload handler for your page:

```javascript
var context = new webkitAudioContext();
var sinewave = new SineWave(context);
sinewave.play();
```

Notice that sinewave.play() works by wiring up the node to the AudioContext's destination (the speakers). To stop the tone, call sinewave.pause(), which unplugs this connection.
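SineWave only relies on createJavaScriptNode, connect, disconnect, and destination. That means its logic can be exercised without audio hardware by handing it a fake context. The `fakeContext` object below is hypothetical test scaffolding, not part of the Web Audio API. It records connections and lets us fire onaudioprocess by hand.

```javascript
// The SineWave class from above, repeated so this snippet runs on its own.
var SineWave = function(context) {
  var that = this;
  this.x = 0;
  this.context = context;
  this.node = context.createJavaScriptNode(1024, 1, 1);
  this.node.onaudioprocess = function(e) { that.process(e); };
};
SineWave.prototype.process = function(e) {
  var data = e.outputBuffer.getChannelData(0);
  for (var i = 0; i < data.length; ++i) {
    data[i] = Math.sin(this.x++);
  }
};
SineWave.prototype.play = function() {
  this.node.connect(this.context.destination);
};
SineWave.prototype.pause = function() {
  this.node.disconnect();
};

// Hypothetical fake context: just enough surface for SineWave.
var fakeContext = {
  destination: { name: "speakers" },
  createJavaScriptNode: function(bufferSize) {
    return {
      bufferSize: bufferSize,
      connectedTo: null,
      onaudioprocess: null,
      connect: function(dest) { this.connectedTo = dest; },
      disconnect: function() { this.connectedTo = null; }
    };
  }
};

var sinewave = new SineWave(fakeContext);
sinewave.play();
console.log(sinewave.node.connectedTo === fakeContext.destination); // true

// Simulate one refill callback with a 1024-sample output buffer.
var out = new Float32Array(sinewave.node.bufferSize);
sinewave.node.onaudioprocess({
  outputBuffer: { getChannelData: function(channel) { return out; } }
});
console.log(sinewave.x); // 1024: one sample per buffer slot

sinewave.pause();
console.log(sinewave.node.connectedTo); // null
```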

Generating Specific Tones

So, now you have yourself a tone. Are we done yet?

Not quite. How does one know what the frequency of the generated wave is? How does one generate tones of arbitrary frequencies?

To answer these questions, we must find out the sample rate of the audio. Each data value we stuff into the buffer in our handler is a sample, and the sample rate is the number of samples processed per second. We can calculate the frequency of the tone by dividing the sample rate by the length of a full wave cycle, measured in samples.

How do we get the sample rate? Via the sampleRate attribute of AudioContext. On my machine, the default sample rate is 44.1 kHz, i.e., 44100 samples per second. Since Math.sin(x) completes one cycle every 2π samples, the frequency of the generated tone in our above code is:

```javascript
freq = context.sampleRate / (2 * Math.PI)
```

That's about 7 kHz. Ouch! Let's use our newfound knowledge to generate less spine-curdling tones. To generate a tone of a specific frequency, you can change SineWave.process to:

```javascript
SineWave.prototype.process = function(e) {
  var data = e.outputBuffer.getChannelData(0);
  for (var i = 0; i < data.length; ++i) {
    // One full cycle now spans sample_rate / frequency samples.
    data[i] = Math.sin(this.x++ / (this.sample_rate / (2 * Math.PI * this.frequency)));
  }
};
```

Also make sure you add the following two lines to SineWave's constructor:

```javascript
this.sample_rate = this.context.sampleRate;
this.frequency = 440;
```

This initializes the frequency to pitch standard A440, i.e., the A above middle C.
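Here is why the divisor works. A tone of frequency f sampled at rate f_s advances by 2π·f/f_s radians per sample, and dividing the sample number by f_s/(2π·f) is the same thing. The helpers below are hypothetical and exist only to check the numbers. They assume a 44100 Hz sample rate, covering both the raw Math.sin(x) tone and A440.

```javascript
// Frequency of Math.sin(x) when x grows by 1 per sample: a cycle takes 2π samples.
function rawSineFrequency(sampleRate) {
  return sampleRate / (2 * Math.PI);
}

// Number of samples in one cycle of a tone at `frequency` Hz.
function samplesPerCycle(frequency, sampleRate) {
  return sampleRate / frequency;
}

// Sample n of a tone at `frequency` Hz, written the way SineWave.process does it.
function sampleAt(n, frequency, sampleRate) {
  return Math.sin(n / (sampleRate / (2 * Math.PI * frequency)));
}

console.log(rawSineFrequency(44100).toFixed(1));     // 7018.7
console.log(samplesPerCycle(440, 44100).toFixed(2)); // 100.23
```

So A440 repeats about every 100 samples. A quarter of that (about 25 samples) lands on the wave's peak.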

The Theremin Effect

Now that we can generate tones of arbitrary frequencies, it's only natural to connect our class to some sort of slider widget so we can experience the entire spectrum right in our browsers. It turns out that jQuery UI already has such a slider, leaving us only a little plumbing to do.

We add a setter function to our SineWave class, and call it from the slider widget's slide handler.

```javascript
SineWave.prototype.setFrequency = function(freq) {
  // process() reads this.frequency, so the change applies on the next sample.
  this.frequency = freq;
};
```

A jQuery UI snippet would look like this:

```javascript
$("#slider").slider({
  value: 440,
  min: 1,
  max: 2048,
  // Fires on every mouse move while the handle is dragged.
  slide: function(event, ui) { sinewave.setFrequency(ui.value); }
});
```

Going up to Eleven

Adding support for volume is straightforward. Add an amplitude member to the SineWave constructor along with a setter method, just like we did for frequency, and change SineWave.process to:

```javascript
SineWave.prototype.process = function(e) {
  var data = e.outputBuffer.getChannelData(0);
  for (var i = 0; i < data.length; ++i) {
    // Scale the unit sine wave by the current volume.
    data[i] = this.amplitude *
        Math.sin(this.x++ / (this.sample_rate / (2 * Math.PI * this.frequency)));
  }
};
```
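The amplitude member and setter are described above but not shown. Below is one possible sketch. The starting value of 0.5 and the clamp to [0, 1] are this sketch's own assumptions, not the author's code.

```javascript
// Minimal stand-in for SineWave so this snippet runs alone; in the real
// class these lines join the existing constructor and methods.
var SineWave = function(context) {
  this.context = context;
  this.amplitude = 0.5; // hypothetical starting volume (half scale)
};

// Mirrors setFrequency. The clamp keeps samples inside the nominal
// [-1, 1] output range (an addition of this sketch).
SineWave.prototype.setAmplitude = function(amp) {
  this.amplitude = Math.min(1, Math.max(0, amp));
};

var wave = new SineWave(null);
wave.setAmplitude(0.25);
console.log(wave.amplitude); // 0.25
wave.setAmplitude(11);       // "up to eleven" gets clamped
console.log(wave.amplitude); // 1
```

A second jQuery UI slider could drive setAmplitude the same way the frequency slider drives setFrequency.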

Folks, we now have a full-fledged sine wave generator!

Boo Hiss Crackle

But we're not done yet. You've probably noticed that changing the frequency causes mildly annoying crackling sounds. This happens because the sample counter x keeps running, so the new frequency picks up at an unrelated point in its cycle. That creates a discontinuity in the wave, which is heard as a high-frequency pop in the audio stream.

Figure: Discontinuity when Changing Frequencies

We try to eliminate the discontinuity by only shifting frequencies when the cycle of the previous frequency completes, i.e., when the sample value is (approximately) zero and rising. (There are better ways to do this, e.g., windowing, low-pass filters (LPFs), etc., but these techniques are out of the scope of this tutorial.)

Although this complicates the code a little bit, waiting for the cycle to end significantly reduces the noise upon frequency shifts.

```javascript
SineWave.prototype.setFrequency = function(freq) {
  // Don't switch immediately; process() picks this up at a zero crossing.
  this.next_frequency = freq;
};

// Note: the constructor must also initialize this.next_frequency (e.g., to
// this.frequency); otherwise the first zero crossing switches to undefined.
SineWave.prototype.process = function(e) {
  // Get a reference to the output buffer and fill it up.
  var data = e.outputBuffer.getChannelData(0);

  // We need to be careful about filling up the entire buffer and not
  // overflowing.
  for (var i = 0; i < data.length; ++i) {
    data[i] = this.amplitude * Math.sin(
        this.x++ / (this.sample_rate / (this.frequency * 2 * Math.PI)));

    // This reduces high-frequency blips while switching frequencies. It works
    // by waiting for the sine wave to hit 0 (on its way to positive territory)
    // before switching frequencies.
    if (this.next_frequency != this.frequency) {
      // Figure out what the next point is.
      var next_data = this.amplitude * Math.sin(
          this.x / (this.sample_rate / (this.frequency * 2 * Math.PI)));

      // If the current point approximates 0, and the direction is positive,
      // switch frequencies.
      if (data[i] < 0.001 && data[i] > -0.001 && data[i] < next_data) {
        this.frequency = this.next_frequency;
        this.x = 0;
      }
    }
  }
};
```
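For comparison, here is a brief sketch of a different, commonly used technique: a phase accumulator. Instead of deriving the phase from a sample counter and waiting for a zero crossing, it keeps a running phase and adds 2π·f/f_s each sample. A frequency change then alters only how fast the phase advances, never its current value, so the waveform stays continuous. This is not the author's method. `fillBuffer` and the `state` fields are hypothetical names, and the demo runs on plain Float32Arrays rather than a live node.

```javascript
// Phase-accumulator sine generator (an alternative to the zero-crossing
// approach above). state needs: phase (radians), frequency (Hz),
// amplitude, sample_rate (Hz).
function fillBuffer(state, data) {
  var twoPi = 2 * Math.PI;
  // How far the phase advances per sample at the current frequency.
  var step = twoPi * state.frequency / state.sample_rate;
  for (var i = 0; i < data.length; ++i) {
    data[i] = state.amplitude * Math.sin(state.phase);
    // Advance and wrap to [0, 2π) so the phase never grows large enough
    // to lose floating-point precision.
    state.phase = (state.phase + step) % twoPi;
  }
}

var state = { phase: 0, frequency: 440, amplitude: 1, sample_rate: 44100 };
var first = new Float32Array(1024);
var second = new Float32Array(1024);

fillBuffer(state, first);
state.frequency = 880; // change pitch between callbacks, mid-cycle
fillBuffer(state, second);

// The step across the buffer boundary is no bigger than an ordinary
// sample-to-sample step, so there is no pop.
var jump = Math.abs(second[0] - first[first.length - 1]);
console.log("jump at boundary: " + jump.toFixed(4));
```

To use this inside SineWave, one could add this.phase = 0 to the constructor and have process() call fillBuffer(this, e.outputBuffer.getChannelData(0)). setFrequency could then assign this.frequency directly, because the phase carries over unchanged.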

The End

Comments, criticism, and error reports welcome. Enjoy!